homelocator

An open-source R package for identifying home locations in human mobility data

Chen, Q., & Poorthuis, A. (2021). Identifying home locations in human mobility data: an open-source R package for comparison and reproducibility. International Journal of Geographical Information Science, 35(7), 1425-1448.

Identifying meaningful locations, such as home or work, from human mobility data has become an increasingly common prerequisite for geographic research. Although location-based services (LBS) and other mobile technology have rapidly grown in recent years, it can be challenging to infer meaningful places from such data, which – compared to conventional datasets – can be devoid of context. Existing approaches are often developed ad-hoc and can lack transparency and reproducibility. To address this, we introduce an R package for inferring home locations from LBS data. The package implements pre-existing algorithms and provides building blocks to make writing algorithmic ‘recipes’ more convenient. We evaluate this approach by analyzing a de-identified LBS dataset from Singapore that aims to balance ethics and privacy with the research goal of identifying meaningful locations. We show that ensemble approaches, combining multiple algorithms, can be especially valuable in this regard as the resulting patterns of inferred home locations closely correlate with the distribution of residential population. We hope this package, and others like it, will contribute to an increase in use and sharing of comparable algorithms, research code and data. This will increase transparency and reproducibility in mobility analyses and further the ongoing discourse around ethical big data research.

Introduction

The analysis of human mobility has become an integral part of a variety of academic (sub-)disciplines. Such analyses can range from understanding commuting flows in cities (e.g. Hincks et al. 2018) to migration flows within and between countries (e.g. Belyi et al. 2017) and have applications in fields beyond Geography that range from urban planning to epidemiology (e.g. Balcan et al. 2009, Maat et al. 2005). Although analyses of human mobility have been conducted for well over a century (cf. Minard’s 1858 map of global migration), they conventionally rely on resource-intensive data sources such as administrative data, census, or manually collected travel surveys. The arrival of the Global Positioning System (GPS) has opened up a host of new possibilities for mobility analysis by making it more affordable and convenient to collect spatio-temporally precise and accurate mobility data (Kwan 2004), which was soon followed by data derived from mobile phone cell towers (e.g. Ahas et al. 2015). In recent years, the onset of social media platforms and location-based services (LBS), often including a geolocation feature, has created another set of potential data sources for mobility analysis (e.g. Tu et al. 2017).

With the proliferation of mobility data sources also come new challenges. One of the foremost differences between many new data sources and more conventional fieldwork-based data is that there is no explicit or direct interaction between researchers and research subjects. This has several implications, including for privacy and ethics, but also means that it is challenging or even impossible to ask additional questions to a research ‘participant’. Such sources may indeed yield mobility data in the form of precise locations and timestamps but without specific meaning attached to those locations. Social media users rarely publish additional location information (for both technical and privacy reasons), which makes inferences about places relatively challenging. We know when and where a person went. But what were they doing there? Why did they go there? How did they get there? Depending on the research question at hand, the answers to some of these questions can be quite essential. However, new sources of mobility data might not be able to provide these answers because they are, in many ways, devoid of social context.

To address this, a new line of research has developed that is focused on inferring what Ahas et al. (2010) call ‘meaningful’ locations from mobility data. The most obvious example of such a location is the place of a person’s home or residence, but this notion can be extended to work, recreation, and other types of locations. In the last 10 years, a myriad of different approaches to inferring meaningful locations has been developed, sometimes as the main impetus for methodological articles (e.g. Ahas et al. 2010, Cheng et al. 2010, McGee et al. 2013) and sometimes as a means-to-an-end in empirical analyses of human mobility (e.g. Huang and Wong 2016, Siła-Nowicka et al. 2016, Xin and MacEachren 2020).

Although similarities between approaches do exist, a consensus or single best approach has not emerged in the current state-of-the-art. What’s more, the actual algorithms used are not always discussed in detail within publications and the source code is rarely released publicly, which makes comparing algorithms as well as reproducing results all the more challenging. This is further complicated by the fact that a ground-truth for the evaluation of the inference process often simply does not exist and mobility data itself is rarely made available to the larger research community for technical, ethical and social reasons. Together, this has resulted in a situation where an increasing number of papers use a process of location inference that is implemented with unique, custom algorithms that are difficult for the larger research community to subsequently evaluate or re-use.

To alleviate this issue, we developed an R package named ‘homelocator’ aimed at bringing consistency and replicability to the domain of inferring meaningful locations. The package allows users to write structured, algorithmic ‘recipes’ or use existing, built-in recipes to identify meaningful locations in LBS data and enables the comparison across different algorithms on the same dataset. To contextualize this work, we first conduct a broad review of the different existing approaches for identifying locations and show how the current state-of-the-art relies on different, often bespoke solutions. We then discuss the approach and design of the R package and its implementation of several pre-existing algorithms. To provide an empirical evaluation, we describe an aggregated and de-identified LBS dataset, consisting of data from Singapore sent between 2012 and 2016. The dataset is made available in conjunction with the R package to provide a baseline for a fair and quantitative comparison across algorithms and approaches. We illustrate our approach by applying four existing algorithms on this dataset and highlight the affordances provided by a subsequent ensemble approach, which enhances the reliability of the inferred meaningful locations.

homelocator package

The package can be installed from GitHub in R using the remotes package:

remotes::install_github("spatialnetworkslab/homelocator")

Specifically, the package has four built-in "recipes". The default "recipe" is called HMLC, which weights data points across multiple time frames to "score" potentially meaningful locations. The other three "recipes" are named OSNA, APDM and FREQ.

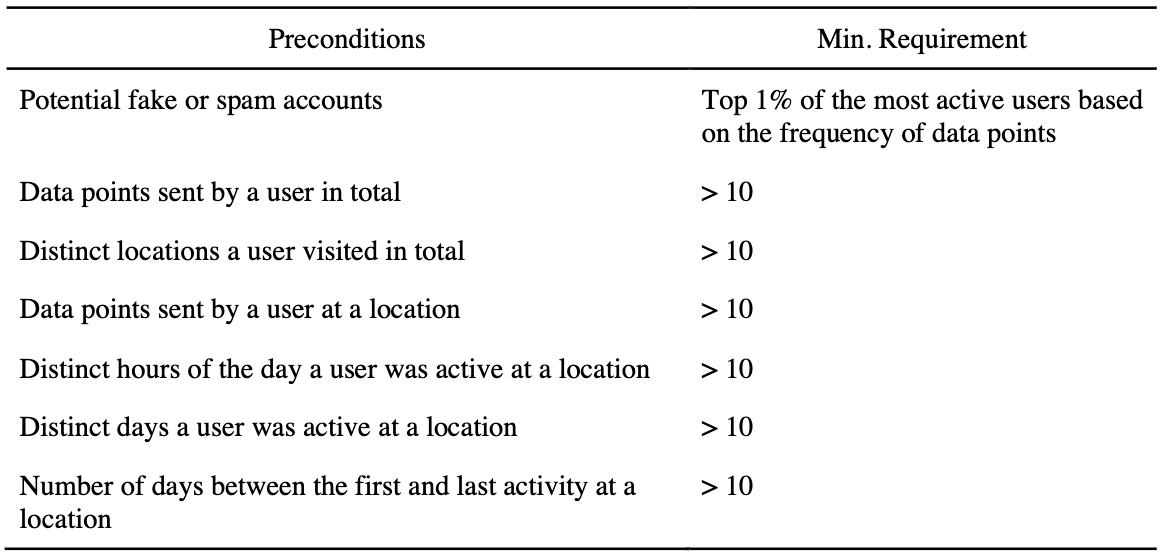

Taking the HMLC recipe as an example, a list of pre-conditions is used for filtering "meaningful" locations (see Fig. 1 (left)), and the thresholds for each condition are tuneable based on specific research questions. After this initial filtering phase, the algorithm uses a "scoring" phase to determine the most likely home location (see Fig. 1 (right)).
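The two phases described above can be sketched in base R. This is only a minimal illustration of the filter-then-score idea: the column names (`u_id`, `loc`, `time`), the threshold and the weights are assumptions made for the example and are not the conditions or values used by the HMLC recipe.

```r
# Toy data: one row per data point (user, location cell, timestamp).
pings <- data.frame(
  u_id = c(rep("a", 6), rep("b", 2)),
  loc  = c("h1", "h1", "h1", "h1", "h2", "h2", "h3", "h3"),
  time = as.POSIXct(c(
    "2016-03-05 22:10:00", "2016-03-06 07:30:00", "2016-03-07 23:05:00",
    "2016-03-12 21:45:00", "2016-03-08 12:00:00", "2016-03-09 13:15:00",
    "2016-03-05 22:00:00", "2016-03-05 23:00:00"
  ), tz = "UTC"),
  stringsAsFactors = FALSE
)

# Phase 1 (filtering): drop users with too few data points overall.
# The threshold of 5 is a hypothetical, tuneable value.
min_points_per_user <- 5
user_counts <- table(pings$u_id)
active_users <- names(user_counts)[user_counts >= min_points_per_user]
kept <- pings[pings$u_id %in% active_users, ]

# Derive the intermediate variables used for scoring.
kept$hour  <- as.integer(format(kept$time, "%H"))
kept$day   <- as.Date(kept$time, tz = "UTC")
kept$night <- kept$hour >= 20 | kept$hour < 8

# Phase 2 (scoring): summarise every user/location pair, then combine the
# summaries into one score. Equal weights of 0.5 are an assumption.
pairs <- split(kept, list(kept$u_id, kept$loc), drop = TRUE)
scores <- do.call(rbind, lapply(pairs, function(d) {
  data.frame(
    u_id = d$u_id[1],
    loc = d$loc[1],
    n_days = length(unique(d$day)),
    night_share = mean(d$night),
    stringsAsFactors = FALSE
  )
}))
scores$score <- 0.5 * scores$n_days / max(scores$n_days) +
  0.5 * scores$night_share

# Keep the highest-scoring location per user as the inferred home.
scores <- scores[order(scores$u_id, -scores$score), ]
home <- scores[!duplicated(scores$u_id), c("u_id", "loc", "score")]
print(home)
```

In this toy example user `b` is removed in the filtering phase, so a home is only inferred for user `a`.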

In addition, the package allows for the customization of "recipes", or writing them completely from scratch. Many of the existing algorithms in the literature can be expressed through a set of basic building blocks, implemented as various key functions.

- `validate_dataset`: validates that the required variables are present in the input dataset. This is a useful first step, especially in the context of reproducible workflows with different input datasets.

- `enrich_timestamp`: derives additional variables from the timestamp column (e.g., ‘weekday’, ‘hour of the day’) that are often used as intermediate variables in home location algorithms.

- A set of functions that make it easier to operate on “nested” data. Nesting data (i.e., the storage of an entire table inside of a column) is a common procedure in the `tidyverse` approach to data manipulation. For location inference, it is often necessary to operate on doubly nested data as analysis is needed per person, per location. To aid the mental gymnastics required for this, we provide nested versions of common tidyverse functions such as `mutate` and `summarise`, as well as functions specific to location inference such as `score_nested`, which allows the user to perform a scoring phase after the initial filtering. Nesting has an additional advantage as it allows for the subsequent parallel processing of data, which can dramatically speed up computing time.

- Finally, a function named `extract_location` extracts the most likely home location for each user based on the filtering and scoring in the previous steps.
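To make the role of these building blocks more concrete, the sketch below mimics the first steps in base R. The helpers `check_columns` and `add_time_parts` are hypothetical stand-ins written for this example; they are not the package's `validate_dataset` or `enrich_timestamp`, and the column names and time zone are assumptions. The last step shows what "doubly nested" data looks like when built with plain lists.

```r
# Hypothetical helper: stop early if required columns are missing.
check_columns <- function(df, required = c("u_id", "loc", "time")) {
  missing_cols <- setdiff(required, names(df))
  if (length(missing_cols) > 0) {
    stop("Missing required column(s): ", paste(missing_cols, collapse = ", "))
  }
  invisible(df)
}

# Hypothetical helper: derive intermediate variables from the timestamp,
# expressed in the local time zone of the study area.
add_time_parts <- function(df, tz = "Asia/Singapore") {
  lt <- as.POSIXlt(df$time, tz = tz)
  df$hour    <- lt$hour
  df$wday    <- lt$wday               # 0 = Sunday, ..., 6 = Saturday
  df$weekend <- lt$wday %in% c(0, 6)
  df
}

pings <- data.frame(
  u_id = c("a", "a", "b"),
  loc  = c("h1", "h2", "h1"),
  time = as.POSIXct(c("2016-03-05 14:10:00", "2016-03-07 01:30:00",
                      "2016-03-06 16:00:00"), tz = "UTC"),
  stringsAsFactors = FALSE
)

enriched <- add_time_parts(check_columns(pings))

# Doubly nested structure: one list element per user, and within each user
# one data frame per location.
nested <- lapply(split(enriched, enriched$u_id), function(u) split(u, u$loc))
str(nested, max.level = 2)
```

Working per user and per location in this way is what the nested helper functions are meant to make less cumbersome.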

More details about the package and its source code are available in its GitHub repository. Documentation for the functions of the package and a tutorial on its use can be found on its documentation website.

Data and Implementation

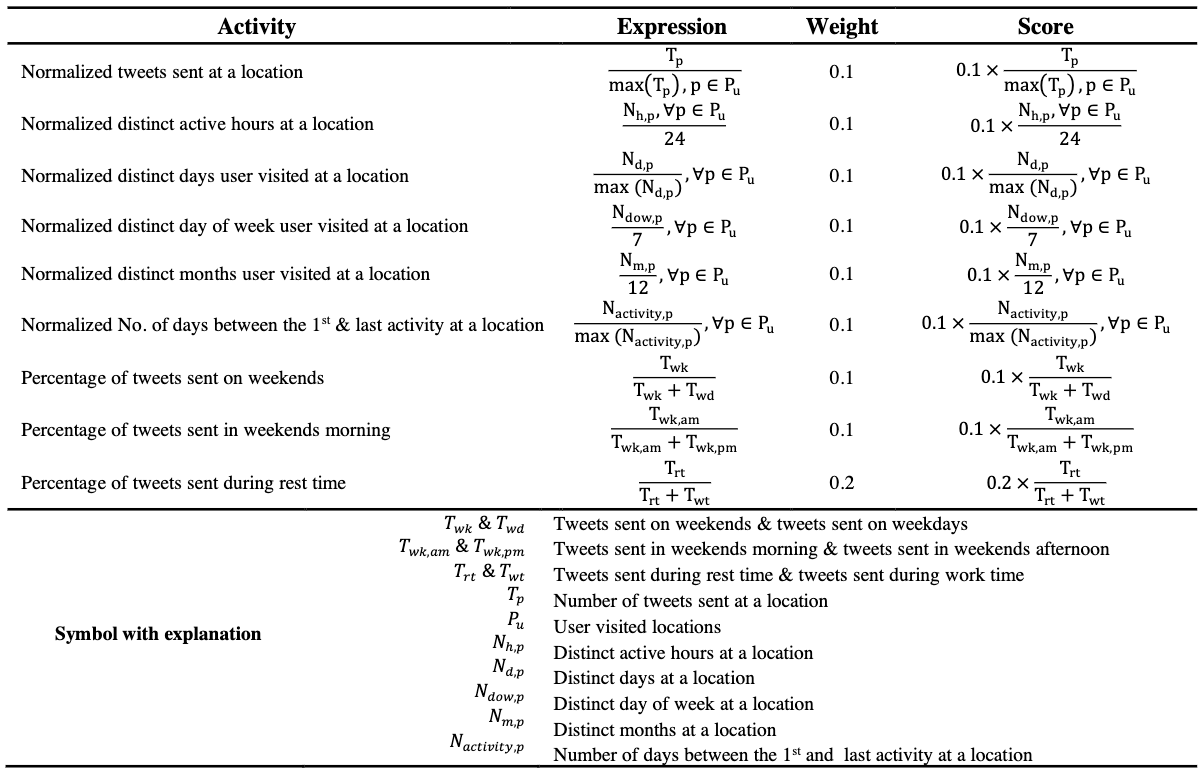

To illustrate and evaluate the package and proposed approach, we use a data repository of all geotagged tweets sent worldwide, derived from the DOLLY project (Poorthuis and Zook 2017). We extract all geotagged tweets sent in Singapore from 2012 to 2016, which amounts to around 22.4 million geotagged messages sent by approximately 405.6k users. We de-identify and aggregate this dataset while preserving enough detail to still serve the ultimate aim of inferring meaningful locations. This procedure is summarized in Figure 2. Specifically, we only retain a (randomly generated) user ID, a location attribute, and a date/time attribute, while any other variables such as the content of the tweet, user description, etc. are removed from the source data. We also remove users with too few data points, as well as those with so many data points that they are likely to be bots. Subsequently, for each user, a 5% portion of their tweets is randomly swapped with other users, effectively introducing a small amount of noise.
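The swapping step can be illustrated with a small base R sketch. It is one possible reading of the description above rather than the procedure actually used to build the dataset: roughly 5% of each user's rows are put in a pool and the user IDs within that pool are shuffled. The data, column names and the choice to round up per user are all assumptions.

```r
set.seed(42)

# Synthetic data: 20 users with 50 data points each.
pings <- data.frame(
  u_id = rep(sprintf("u%02d", 1:20), each = 50),
  loc  = sample(paste0("hex", 1:30), 1000, replace = TRUE),
  stringsAsFactors = FALSE
)

swap_share <- 0.05

# Pick about 5% of the rows of every user (rounded up).
rows_by_user <- split(seq_len(nrow(pings)), pings$u_id)
swap_rows <- unlist(lapply(rows_by_user, function(i) {
  i[sample.int(length(i), ceiling(swap_share * length(i)))]
}), use.names = FALSE)

# Shuffle the user IDs among the selected rows only. Every user keeps the
# same number of data points; a row may by chance keep its original user.
noisy <- pings
noisy$u_id[swap_rows] <- sample(pings$u_id[swap_rows])

# Share of rows that now belong to a different user.
mean(noisy$u_id != pings$u_id)
```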

The next step consists of both temporal masking (timestamps are shifted by a random number of minutes, and the day of the week is swapped with a similar day, i.e. a weekday or a weekend day) and geomasking (locations are offset by a random distance < 100 m). We also aggregate locations to 750 m hexagonal grid cells. These aggregated 750 m hexagonal grid cells are used as ‘locations’ in the remainder of the paper. We consider this a sensible resolution for the Singapore case, but it is important to emphasize that the size of the grid cell is an open choice for researchers depending on their specific research questions.
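A rough sketch of the two masking operations in base R follows. The coordinates, the ±30 minute window and the metre-to-degree approximation are assumptions made for illustration; the day-of-week swap and the aggregation to hexagonal cells are not covered here.

```r
set.seed(1)

# Three made-up points in Singapore (WGS84 longitude/latitude).
pts <- data.frame(
  lon  = c(103.8198, 103.8500, 103.7764),
  lat  = c(1.3521, 1.2900, 1.2966),
  time = as.POSIXct(c("2016-03-05 22:10:00", "2016-03-06 07:30:00",
                      "2016-03-07 23:05:00"), tz = "UTC")
)
masked <- pts

# Temporal masking: shift every timestamp by a random number of whole
# minutes. The +/- 30 minute window is a hypothetical choice.
max_shift_min <- 30
shift_min <- sample(-max_shift_min:max_shift_min, nrow(pts), replace = TRUE)
masked$time <- pts$time + shift_min * 60

# Geomasking: move every point by a random distance below 100 m in a
# random direction, using a simple local metres-to-degrees approximation.
max_offset_m  <- 100
m_per_deg_lat <- 111320
dist_m <- runif(nrow(pts), min = 0, max = max_offset_m)
angle  <- runif(nrow(pts), min = 0, max = 2 * pi)
masked$lat <- pts$lat + dist_m * cos(angle) / m_per_deg_lat
masked$lon <- pts$lon +
  dist_m * sin(angle) / (m_per_deg_lat * cos(pts$lat * pi / 180))

print(masked)
```

For study areas far from the equator or for larger offsets, a projected coordinate system would be a more careful choice than this approximation.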

Finally, locations visited by fewer than 5 people, as well as users whose inferred home is at a location with fewer than 5 inferred residential locations, are removed to prevent re-identification. This final aggregated and de-identified dataset consists of around 20.1 million data points (89.5% of the original dataset) created by approximately 130.3k users (32.13% of the original dataset). To aid in subsequent apples-to-apples comparison across algorithms and approaches, this aggregated and de-identified dataset is made publicly available in conjunction with the package.
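The first part of this suppression rule, removing locations visited by fewer than 5 distinct people, can be sketched as follows. The data and column names are made up; the same pattern could be applied to the count of inferred homes per location.

```r
# Toy data: hexA is visited by 6 users, hexB by 3, hexC by 5
# (u1 appears twice at hexC, which must not be counted twice).
pings <- data.frame(
  u_id = c(paste0("u", 1:6), paste0("u", 1:3), paste0("u", 1:5), "u1"),
  loc  = c(rep("hexA", 6), rep("hexB", 3), rep("hexC", 5), "hexC"),
  stringsAsFactors = FALSE
)

min_users <- 5

# Count distinct users per location and keep only sufficiently busy cells.
users_per_loc <- tapply(pings$u_id, pings$loc, function(u) length(unique(u)))
keep_locs <- names(users_per_loc)[users_per_loc >= min_users]
released <- pings[pings$loc %in% keep_locs, ]

print(users_per_loc)
print(unique(released$loc))
```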

Privacy and Ethics

Social media and LBS have become a mainstay in many millions of people’s lives, creating detailed datasets of their spatio-temporal footprints in the process. While this has enabled a plethora of social and spatial research, this technology (and its use by researchers) also has specific privacy and ethical consequences.

Considerations for privacy have a longer history in GIScience, for example considering the trade-offs between the accuracy of an analysis and maintaining a certain degree of privacy for research participants (Kwan et al. 2006, Olson et al. 2006). Point data, even when only disclosed on published maps, can often be reverse engineered (Curtis et al. 2006, Kounadi and Leitner 2014). To alleviate this, geomasking (or perturbing) techniques can be applied to such data (Swanlund et al. 2020) but LBS provide additional challenges to privacy as they can contain more precise, expansive, and linkable data than conventional point data sets (Gao et al. 2019). De-anonymization and re-identification are very serious concerns. As such, with the proliferation of these platforms, the salience and specific consideration of geoprivacy have also risen (Keßler and McKenzie 2018).

As Elwood and Leszczynski (2011) already pointed out a decade ago, privacy on such platforms is never binary but always negotiated – with distinct potential differences between a person’s expected and actual privacy. This makes charting an appropriate, ethical course for researchers in this domain certainly not trivial. In this work, we take inspiration from Zook et al.’s (2017) ‘ten simple rules for responsible big data research’. These rules (e.g. ‘guard against re-identification’, ‘debate the tough, ethical choices’) are by no means simple – especially not when considering the trade-offs between maintaining the privacy and the accuracy and usefulness of the subsequent analysis – but provide a useful, albeit general foundation.

Beyond privacy, there is also a broader ethical question to consider. First, for conventional social science research, clear ethical standards exist, including the concept of informed consent. Although many big data sets are technically ‘public’, this does not mean users have given their explicit and informed consent to be included in such data, let alone for its use in a myriad of research projects (cf. Zimmer’s (2018) discussion of the Kirkegaard OKCupid data release). As a post-hoc consent process might not (always) be trivial (Norval and Henderson 2017), this is an additional reason to pay careful attention to the ethics, potential consequences and harm that a dataset or analysis can result in. Second, there are significant issues around access to LBS datasets. While some data is available publicly, companies increasingly put walls around this data (Longley and Adnan 2016, Lovelace et al. 2016) and limit access to company employees or simply to those who pay for data products – often unbeknownst to the people contained within such data (Thompson and Warzel 2019). As Taylor (2016) aptly illustrates in the case of West African mobile phone data, who has access, and how, are important questions with complicated answers, especially as increasing datafication has intensified the connection between data and capitalism (Sadowski 2019).

It is clear that any researcher working with LBS data will have to consciously navigate these issues. An important example of what can go wrong otherwise is provided by Prabhu and Birhane (2020) in their thorough analysis of the large ImageNet dataset, which is relatively foundational in the field of computer vision. They lay bare how significant issues surrounding the sourcing of the data, its labelling and its subsequent use in AI models bring harm to several social groups. Such harm could also happen in the creation and use of LBS datasets, especially when we consider the process of inferring meaningful locations, which can be sensitive.

Although no one-size-fits-all solution exists, we proceed based on the following principles:

- Spatial aggregation: for many geographic research questions and analyses, it is not necessary to know precise locations. Aggregation to the largest spatial unit that still allows for answering the question at hand should be conducted. For example, in this work we aggregate to 750-m hexagons.

- Limiting data use: much research ultimately does not need exact data on individual users but is focused on larger patterns and processes instead. Following practices common in statistics agencies, any data on locations with only a few linked users is removed. Along the same lines, inferring meaningful locations based on a person’s friends or the implicit content of their messages is far removed from the original intent with which data is shared. For this reason, we focus only on timestamp and location information.

- Perturbation and random noise: to further prevent re-identification or the subsequent linking with other datasets, the only two data points used (location and timestamp) are randomly perturbed and random noise is added through swapping data points between users to provide an additional layer of de-identification and plausible deniability.

Unfortunately, protecting privacy and performing failsafe de-identification is still an open problem without a clear silver-bullet solution (Fung et al. 2010, Fiore et al. 2020). This is especially poignant for what is often referred to as ‘micro’ trajectory data, which consists of precise spatio-temporal data points collected at consistent intervals through GPS or mobile phones (cf. Thompson and Warzel 2019). Pseudonymizing and geomasking alone (Fiore et al. 2020), or even aggregation (de Montjoye et al. 2013), might not be enough to prevent a re-identification ‘attack’ on such granular data.

The LBS dataset used in this work does not have the same level of granularity as micro trajectory data. In contrast, it generally covers only several dozen to several hundred data points per user over a period of 5 years. Nonetheless, to minimize any potential harm, we have combined all of the aforementioned steps (aggregation, limiting variables, spatio-temporal perturbation, random noise) to create a de-identified dataset.

Results and Discussion

We use the de-identified dataset to evaluate the three existing algorithms, in addition to our own algorithm. Computation is carried out on a MacBook Pro with a 2.3 GHz dual-core Intel Core i5 and 16 GB of memory. To speed up computing and make processing large datasets possible, each algorithm is processed in parallel (2 cores). Of course, computing time will differ based on the complexity of the algorithm and the size of the dataset. For reference, the HMLC algorithm takes ~2.8 h to process the 20.1 million records in the dataset, while the FREQ approach takes only ~1.9 h.
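Because each user can be processed independently, per-user work lends itself to parallel execution. The sketch below uses `mclapply` from R's bundled `parallel` package with 2 cores; it is not how the package itself parallelises its recipes, and the most-frequent-location rule is only a stand-in for a real recipe. Forking is unavailable on Windows, so the example falls back to a single core there.

```r
library(parallel)

# Toy data: one row per data point.
pings <- data.frame(
  u_id = c("a", "a", "a", "b", "b", "c"),
  loc  = c("h1", "h1", "h2", "h3", "h3", "h4"),
  stringsAsFactors = FALSE
)

# Stand-in for a recipe: the location with the most data points.
most_frequent <- function(d) names(which.max(table(d$loc)))

# One chunk per user; chunks are processed independently of each other.
by_user <- split(pings, pings$u_id)

# mclapply relies on forking, which Windows does not support.
n_cores <- if (.Platform$OS.type == "windows") 1L else 2L
homes <- unlist(mclapply(by_user, most_frequent, mc.cores = n_cores))

print(homes)
```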

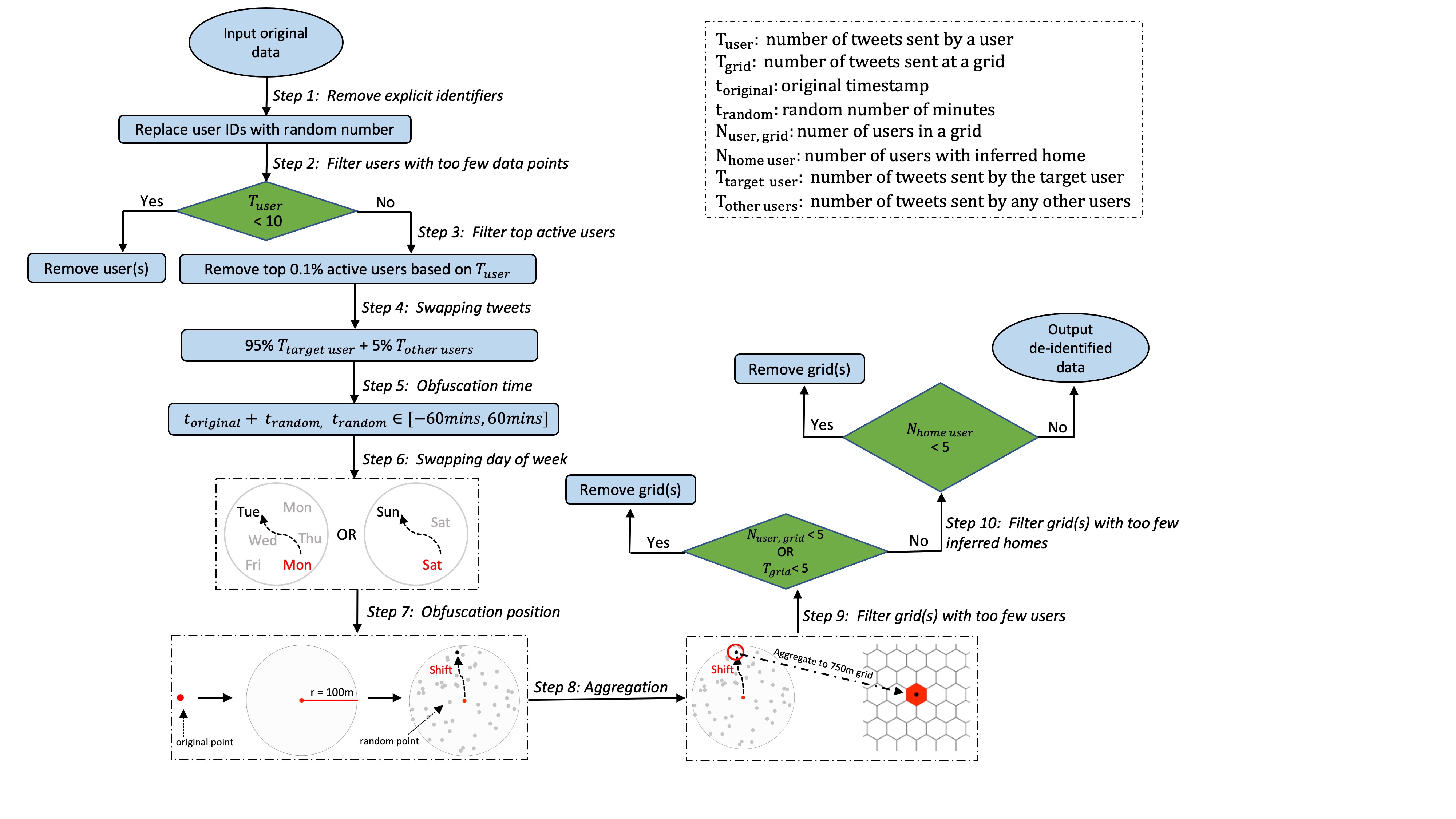

As Figure 3 clearly shows, different algorithms may yield very different results. We also see clear differences in how many users an algorithm can infer a location for. For example, OSNA identifies a home location for 89.1% of all users. However, the spatial distribution of those users deviates quite a bit from the other three approaches. One possible reason for this deviation is that the OSNA algorithm is less ‘strict’ and includes users with relatively few data points that are filtered out in the other approaches. Our own approach, HMLC, is relatively close to the ‘APDM’ approach and identifies a home location for only ~25.7% of all users. These two algorithms show relatively similar spatial patterns. There are 28,007 users (21.5%) that have been assigned a location by all four algorithms, which we refer to as ‘shared’ users. Even though the approaches are quite distinct, 21,863 (78.1%) of these 28,007 shared users are assigned the same home location by all four approaches.

In this way, the use of the package also opens the door to the adoption of ensemble methods, especially in contexts where prevention of false positives is important. An ensemble method can apply different algorithms on the same dataset and only extract the inferred location for a user when the results of all algorithms match each other.
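A minimal sketch of such an ensemble in base R is shown below. It assumes the results of the four recipes have already been combined into one table with one column per algorithm and `NA` where no home was inferred; the table layout and the values are invented for illustration.

```r
# Invented results: one row per user, one column per algorithm.
results <- data.frame(
  u_id = c("a", "b", "c", "d"),
  HMLC = c("h1", "h2", NA,   "h4"),
  OSNA = c("h1", "h3", "h5", "h4"),
  APDM = c("h1", "h2", NA,   "h4"),
  FREQ = c("h1", "h2", "h5", "h9"),
  stringsAsFactors = FALSE
)
algos <- c("HMLC", "OSNA", "APDM", "FREQ")

# 'Shared' users: a home location was inferred by every algorithm.
shared <- results[complete.cases(results[, algos]), ]

# Keep a user only if all algorithms point to the same location.
agree <- apply(shared[, algos], 1, function(x) length(unique(x)) == 1)
ensemble <- data.frame(
  u_id = shared$u_id[agree],
  home = shared$HMLC[agree],
  stringsAsFactors = FALSE
)

# Share of shared users on which all algorithms agree.
share_agree <- mean(agree)

print(ensemble)
print(share_agree)
```

Requiring full agreement is the strictest variant; a majority vote would retain more users at the cost of more potential false positives.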